A magazine where the digital world meets the real world.

On the web

- Home

- Browse by date

- Browse by topic

- Enter the maze

- Follow our blog

- Follow us on Twitter

- Resources for teachers

- Subscribe

In print

What is cs4fn?

- About us

- Contact us

- Partners

- Privacy and cookies

- Copyright and contributions

- Links to other fun sites

- Complete our questionnaire, give us feedback

Search:

Video Google

Google-like search engines have been massively successful. Trouble is you have to use text to search. In the meantime the world has gone multimedia. The web is now full of images and movies. Youtube has 70 million videos. Flickr has 2 billion images with a million more added every day. It isn't just the web. Even searching personal photo collections can already be daunting - and photos only went digital a few years ago!

The problem's are increasingly becoming everyday ones. Just as googling has become normal activity (how did we manage before?) so much more will be possible if the problems of searching images can be cracked. Professor Andrew Zisserman's Visual Geometry research team at Oxford University have some solutions.

First, let's look at some everyday scenarios. Suppose you do the PR for a company selling a revolutionary new camera design, and have done a product placement deal. The latest Bond film will feature the new camera, either in the hands of characters or in other ways like on advertising hoardings in the background. The deal is that it will appear at least 20 times in the final cut of the film. You have paid a lot of money. Now you want to be able to check that it really does happen. How do you do it? Is the only way to sit someone down in front of the film and count. What if they counted 19? Perhaps they just got too engrossed in the film and missed one! Why can't the computers help?

Here is another scenario. Suppose you run a successful webzine. You take great pride in using stunning images and need an image to illustrate a story about an iPod. Trouble is you know you took a perfect image a couple of years back. Fine except you have tens of thousands of photos and you can't remember when you took it. You can't just search for the word 'iPod' because that would only have worked if every time you took a photo you annotated it with good descriptions. You don't do that at all. Life is too short. How do you find that photo?

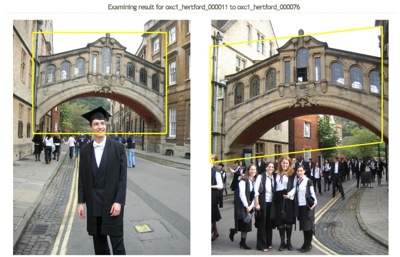

Here is a more futuristic scenario (actually not that futuristic anymore, but that is another story). Suppose you are now one of the millions of people who wear a video button. It records everything you see and do and saves it forever. All the time. It means you never need to forget an experience. You are now standing in front of the Bridge of Sighs in Oxford with your boyfriend. You claim you've both been here before. He says it must have been someone else. Exactly the kind of argument the video button should solve...but how do you rewind to that particular video memory given you don't remember when it was?

What you need in all these cases is a way to 'Google' images...but not the current Google image way. That isn't really searching images at all. There you type in text to search for and it needs someone to have previously labelled the image with the text you are searching for somehow. That isn't good enough for our tasks. We want to find images when there is no text in sight, just images. Wouldn't it be good to Google images by giving an image of an object (like the Camera, the iPod or the bridge) and the search engine just returns all the scenes or images that match - in order of how well they match? How could we design a system to do that? How would it work?

Bridge? What Bridge?

There are three different but related problems you could want to solve if searching for images using images in this way. You could, given a picture of a bridge want images of a bridge, any bridge. Alternatively you could be looking for a near duplicate version of that actual image. Perhaps you have the low resolution version and want the original high quality version. A third possibility is you could want to find different photos containing that same object. You just want images of the Bridge of Sighs in Oxford.

The last option might sound easy at first - can't you just cut out the object you are interested in and have the computer check if it matches any portion of each image in the collection being searched, pixel by pixel? Well no. In the other images, it won't be at exactly the same angle. The size will be different: it might be a tiny object in the background. If taken at a different time, the light will have changed. There might even be other objects or people in front of it, partially hiding it.

The Oxford team set out to answer two questions. Is it possible to use a similar approach to the Google text searching methods to search for objects in images? Secondly, can you retrieve all occurrences of an object from an image collection or video?

Find that word

How do search engines search web pages so quickly? If you think about it, it is amazing. You can type in any arbitrary word and Google can search the whole of the web for it instantly! How on earth is it done so quickly?

Search engines send out web crawling programs that go from page to page, taking a copy of the pages and processing them. A first thing they can do is called 'stemming'. This means chopping down words to their roots. If 'walks', 'walking' and 'walked' are all converted to 'walk' they can be treated as though they were all the same. Common words like 'the', 'of' and 'a' are also thrown away as uninteresting. Finally what is stored back at base is an 'inverted file'. That just means that each word found is stored with a list of all the places it appears. The process is basically the same as creating the index for a book. The final index is an inverted version of the book - each word is followed by a list of page numbers where it appeared. For web pages, the web addresses are stored rather than page numbers. Google, Yahoo et al each stores a gigantic inverted version of the web on its servers. All this work has been done off-line long before you ever did a search. When you do a simple search, all that is needed is to look up the word in this vast index and return the web addresses.

Spot the Visual Word

If we are going to do something similar for images, then we need some image equivalent of words to search for. Pictures of whole objects don't work because as we saw they change too much. What does work is using small patches of images. You have to choose the patches with care. They need to be interest points with distinctive texture. Essentially that just means there is a distinctive pattern of edges in the patch. Choosing interesting patches is a bit like the way google only stores interesting words for text search.

You don't just use the patch of image as the 'word' though. You turn it into a series of numbers recording things about the texture. In the Oxford system this involves recording 128 numbers for each patch. Storing these properties of the patch rather than the image itself means we don't have to worry about corresponding patches on different images being different sizes or orientations. Magnify or rotate the patch and it will still give the same 128 numbers.

We now have the basis of our 'visual words'. There is one more step though, a little similar to stemming words. Patches with very similar sets of numbers are grouped together into a single point. How do we decide they are similar? Suppose it was just 3 numbers not 128. The numbers would represent a point in space. Similar sets of numbers are those that cluster together in space. The idea is just the same but in a 128 dimensional space (OK - so that is hard to imagine in reality, but scientists think of multi-dimensional spaces all the time). Points that cluster together in the 128 dimensional space are treated as a single point. We now have our visual words to search for.

Suppose we are searching for iPods in our photo collection. Before we start the system will have calculated all the visual words on every photo. This might be a thousand or more visual words for each one - a thousand or more patches representing the interesting areas of that image. Those are stored as an inverted file. Remember that is just an index allowing you to look up any visual word that appears anywhere and gives a list of all the photos it occurs in.

Now we start with any picture containing an iPod and select an area round the iPod so that it is the only part of the image that we search for. The system first works out what all the visual words in that area are. It then looks them up in its big index and returns all the images with matches. The more patches that match, the closer the fit.

With text, the search process might stop there. With an image there is more you can do though. Images have structure - even if an object is at a different angle, or a different size, the relative positions of patches on the same object will stay the same. Your eyes don't jump to the same side of your nose just because you turn your head! The images that contain the same object will therefore not just have matching visual words, but those visual words will also keep in similar relative positions. Put the images side by side and suppose the second is just smaller or shifted. If you draw lines between the corresponding patches and they will not, on the whole, cross. Those that do are just similar looking patches on something different, so can be ruled out.

You are left with a set of pictures from your collection that contain a match with the iPod. They can be ranked, for example, by the number of matches left. Go through quickly checking it's not a false match, counting them and you know how many times the iPod appeared.

A video is just a sequence of still images. Finding the camera in the Bond film is therefore really just the same problem, solved in the same way. One improvement is that in films, the stills are grouped into scenes where not that much changes from one to the next. Single 'key frames' images can be used to represent whole sequences. Of course you can just as quickly search for any other object once the index has been made - all the scenes where James Bond's bow tie appears perhaps, or the ones with Blofeld's cat in.

By bringing together techniques from computer vision and information retrieval research, the Oxford team have shown you can search images in the way current search engines search text. It should therefore scale to gigantic collections in the same way that Google can search all the vastness that is the web. Their experiments have also shown you can pull out all the occurrences of a specific object in this way. What next? Well, there are lots of ways that might improve the system further. The visual words take no account of colour, for example. The system can currently only search for objects. What about actions: a search for images of 'running' or 'eating' perhaps. Can that be done in a similar way?

There is lots more research to be done but the system is already impressive - and you can try it out for yourself - both searching films and searching photo collections. You might just be amazed at how fast you can find an object in a film not to mention how accurately it does it...and it is all down to some very clever algorithms and a lot of research.

Oh! And if you really were filming your whole life you could of course do a video google search to find all the times you had stood looking at the Bridge of Sighs and see who was with you...though perhaps somethings are better left unchecked...just in case.

This article is based on a seminar Andrew Zisserman gave at Queen Mary, University of London, about his work with Ondrej Chum, Michael Isard, James Philbin and Josef Sivic.