A magazine where the digital world meets the real world.

3D models in motion

by Paul Curzon, Queen Mary University of London

based on a 2016 talk by Lourdes Agapito

The cave paintings in Lascaux, France are early examples of human culture from 15,000 BC. There are images of running animals and even primitive stop motion sequences - a single animal painted over and over as it moves. Even then, humans were intrigued by the idea of capturing the world in motion! Computer scientist Lourdes Agapito is also captivated by moving images. She is investigating whether it’s possible to create algorithms that allow machines to make sense of the moving world around them just like we do. Over the last 10 years her team have shown, rather spectacularly, that the answer is yes.

People have been working on this problem for years, not least because the techniques are behind the amazing realism of CGI characters in blockbuster movies. When we see the world, somehow our brain turns all that information about colour and intensity of light hitting our eyes into a scene we make sense of - we can pick out different objects and tell which are in front and which behind, for example.

In the 1950s psychophysics researcher Gunnar Johansson showed some of how our brain does this. He dressed people in black with lightbulbs fastened around their bodies. He then filmed them walking, cycling, doing press-ups, climbing a ladder, all in the dark ... with only the lightbulbs visible. He found that people watching the films could still tell exactly what they were seeing, despite the limited information. They could even tell apart two people dancing together, including who was in front and who behind. This showed that we can reconstruct 3D objects from even the most limited of 2D information when it involves motion. We can keep track of a knee, and see it as the same point as it moves around. It also shows that we use lots of 'prior' information - knowledge of how the world works - to fill in the gaps.

Shortcuts

Film-makers already create 3D versions of actors, but they use shortcuts. The first shortcut makes it easier to track specific points on an actor over time. You fix highly visible stickers (equivalent to Johansson's lightbulbs) all over the actor. These give the algorithms clear points to track. This is a bit of a pain for the actors, though. It could also never be used to make sense of random YouTube or CCTV footage, or whatever a robot is looking at.

The second shortcut is to surround the action with cameras so it's seen from lots of angles. That makes it easier to track motion in 3D space, by linking up the points. Again this is fine for a movie set, but in other situations it's impractical.

A third shortcut is to create a computer model of an object in advance. If you are going to be filming an elephant, then hand-create a 3D model of a generic elephant first, giving the algorithms something to match. Need to track a banana? Then create a model of a banana instead. This is fine when you have time to create models for anything you might want your computer to spot.

All of this is possible for big-budget film studios, if a bit inconvenient, but it's totally impractical anywhere else.

No Shortcuts

Lourdes took on a bigger challenge than the film industry. She decided to do it without the shortcuts: to create moving 3D models from single cameras, applied to any traditional 2D footage, with no pre-placed stickers or fixed models created in advance.

When she started, a dozen or so years ago, making any progress looked incredibly difficult. Now she has largely solved the problem. Her team's algorithms are even close to doing it all in real time, so making sense of the world as it happens, just like us. They are able to make really accurate models, down to details like the subtle movements of a person's face as they talk and change expression.

There are several secrets to their success, but Johansson's revelation that we rely on prior knowledge is key. One of the first breakthroughs was to come up with ways that individual points in the scene like the tip of a person's nose could be tracked from one frame of video to the next. Doing this well relies on making good use of prior information about the world. For example, points on a surface are usually well-behaved in that they move together. That can be used to guess where a point might be in the next frame, given where others are.
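To make the "points move together" idea concrete, here is a minimal sketch in Python. It is only an illustration, not the team's algorithm: the function name `predict_next_position` and the example coordinates are made up, and it assumes the simplest possible prior, that a point shifts by the average amount its neighbours shifted.

```python
def predict_next_position(point, neighbours_prev, neighbours_next):
    """Guess where `point` will be in the next frame.

    Assumption (for illustration only): the point moves by the average
    displacement of nearby points on the same surface.
    """
    if not neighbours_prev or len(neighbours_prev) != len(neighbours_next):
        raise ValueError("need matching, non-empty lists of neighbour positions")
    n = len(neighbours_prev)
    # Average shift of the neighbours between the two frames.
    dx = sum(b[0] - a[0] for a, b in zip(neighbours_prev, neighbours_next)) / n
    dy = sum(b[1] - a[1] for a, b in zip(neighbours_prev, neighbours_next)) / n
    return (point[0] + dx, point[1] + dy)


# Hypothetical pixel coordinates: a nose tip and three nearby points.
nose_tip = (100.0, 50.0)
nearby_prev = [(90.0, 55.0), (110.0, 55.0), (100.0, 60.0)]
nearby_next = [(92.0, 56.0), (112.0, 56.0), (102.0, 61.0)]

guess = predict_next_position(nose_tip, nearby_prev, nearby_next)
print(guess)
```

A real tracker would then search the image around that guess rather than trusting it blindly. The prior just narrows down where to look.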

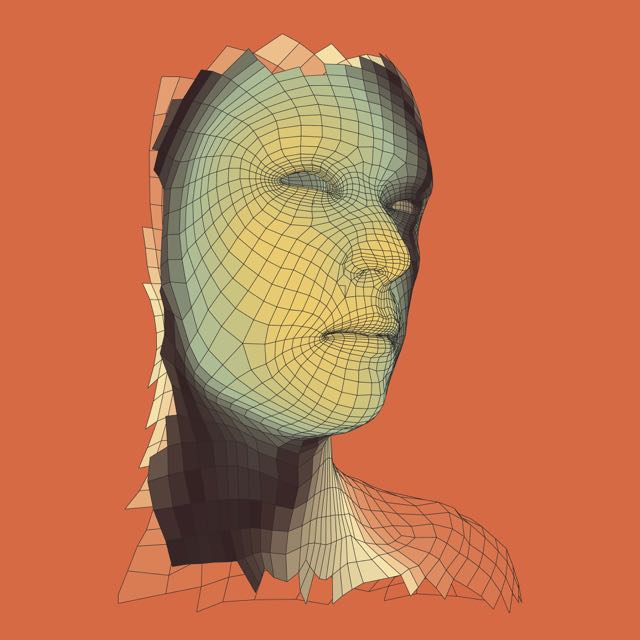

The next challenge was to reconstruct all the pixels rather than just a few easy-to-identify points like the tip of a nose. This takes more processing power but can be done by lots of processors working on different parts of the problem. Key to this was to take account of the smoothness of objects. Essentially a virtual fine 3D mesh is stuck over the object - like a mask over a face - and the mesh is tracked. You can then even stick new things on top of the mesh so that they move with it - adding a moustache, or painting the face with a flag, for example, in a way that changes naturally in the video as the face moves.
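One way to picture a smoothness prior on a mesh is sketched below in Python. It is a toy under stated assumptions, not the method used in the research: the mesh is just a chain of five points, each with an estimated depth, and the made-up function `smooth_depths` repeatedly nudges every point towards the average depth of its neighbours.

```python
def smooth_depths(depths, neighbours, strength=0.5, iterations=10):
    """Pull each mesh point's depth towards the average of its neighbours.

    `neighbours` maps a point's index to the indices it is joined to.
    `strength` and `iterations` are illustrative settings, not tuned values.
    """
    current = list(depths)
    for _ in range(iterations):
        updated = []
        for i, depth in enumerate(current):
            joined = neighbours[i]
            if not joined:
                updated.append(depth)  # isolated point: nothing to smooth with
                continue
            average = sum(current[j] for j in joined) / len(joined)
            updated.append((1 - strength) * depth + strength * average)
        current = updated
    return current


# A hypothetical chain of five mesh points; the middle depth is a noisy spike.
neighbours = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
noisy = [1.0, 1.0, 3.0, 1.0, 1.0]

smoothed = smooth_depths(noisy, neighbours)
print(smoothed)
```

Used on its own, this kind of smoothing would eventually flatten every detail. In practice a smoothness prior has to be balanced against what the video actually shows, so that real bumps like a nose survive while noise does not.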

Once this could all be done, if slowly, the challenge was to increase the speed and accuracy. Using the right prior information was again what mattered. For example, rather than assuming points have constant brightness, it mattered to take account of the fact that brightness changes, especially on flexible things like mouths. Another innovation was to separate the effect of colour from that of light and shade.
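The Python sketch below shows why assuming constant brightness can mislead a tracker. It uses a standard textbook comparison rather than the team's own technique: a plain sum of squared differences (which assumes brightness never changes) against a score that first removes each patch's average brightness and contrast. The patch values and the function names `ssd` and `normalised_match` are invented for the example.

```python
import math


def ssd(a, b):
    """Sum of squared differences: small only if brightness stays the same."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def normalise(patch):
    """Remove the patch's average brightness and scale out its contrast."""
    mean = sum(patch) / len(patch)
    centred = [value - mean for value in patch]
    size = math.sqrt(sum(value * value for value in centred))
    if size == 0:
        return centred  # a flat patch has no pattern to compare
    return [value / size for value in centred]


def normalised_match(a, b):
    """Score near 1.0 when two patches share a pattern, whatever the lighting."""
    return sum(x * y for x, y in zip(normalise(a), normalise(b)))


# Hypothetical grey levels for a small image patch.
patch = [10, 40, 20, 80, 30, 60]
# The same patch in the next frame, but lit more brightly.
same_but_brighter = [1.5 * value + 20 for value in patch]
# A different patch that happens to have similar grey levels.
different = [20, 30, 30, 70, 40, 50]

print(ssd(patch, same_but_brighter), ssd(patch, different))
print(normalised_match(patch, same_but_brighter), normalised_match(patch, different))
```

Here the constant-brightness score prefers the wrong patch, because the right one has changed brightness, while the normalised score picks the right one.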

There is lots more to do, but already the moving 3D models created from YouTube videos are very realistic, and are being processed almost as the action happens. This opens up amazing opportunities for robots; augmented reality that mixes reality with the virtual world; games; telemedicine; security applications; and lots more. It's all been done a little at a time, taking an impossible-seeming problem and, instead of tackling it all at once, solving simpler versions. All the small improvements, combined with using the right information about how the world works, have built over the years into something really special.