A magazine where the digital world meets the real world.

How to get noticed

Hollywood legend has it that the 1950s film beauty Lana Turner was discovered while sitting in a diner having a milkshake. All it took was for the right person to notice her and stardom beckoned. What attracts our eye though? What makes something stand out from its surroundings? Sharp-eyed computer scientists at Queen Mary, University of London have found a piece of the puzzle, and not only does it tell us something about how the brain works, their findings could be used in applications from robotics to spot-the-difference puzzles.

Milan Verma and Peter McOwan have made a mathematical model for measuring the noticeability of parts of an image – what’s called ‘visual saliency’. It turns out that areas of a picture stand out if they have a high colour contrast, or if they go from bright to dark. Also, areas with more detail stand out more than parts with less. At least that’s what catches humans’ eyes. How do you train a computer to find the same things?

The computer makes a ‘saliency map’ of the image – it figures out just how noticeable each section is by comparing it to the section next to it, working like the retina in your eye. It gives each section a score based on colour, brightness and orientation. The sections with the highest score go down on the map as the most noticeable. That gives you a good way to test whether your map is correct – just give someone the image you’ve mapped. Their eyes will snap right to the most salient part of the image. If their eyes agree with the computer you’re in business. You should also be able to track the person’s eyes as they go to the next most salient bit, and the next, and so on.
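To make the idea concrete, here is a minimal sketch of a saliency map in Python. It is not Milan and Peter's model: it assumes the image is just a small grid of brightness values, and it scores each cell only by how different its brightness is from its immediate neighbours, leaving out colour and orientation. The function names `saliency_map` and `scan_order` are made up for this illustration.

```python
def saliency_map(image):
    """Score each cell by its average brightness difference from its neighbours.

    `image` is a list of rows of brightness values (an assumption of this
    sketch; a fuller model would also compare colour and orientation).
    """
    rows, cols = len(image), len(image[0])
    scores = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            diffs = []
            # Compare the cell with each of its (up to eight) neighbours.
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    nr, nc = r + dr, c + dc
                    is_self = dr == 0 and dc == 0
                    if not is_self and 0 <= nr < rows and 0 <= nc < cols:
                        diffs.append(abs(image[r][c] - image[nr][nc]))
            # A lone cell has no neighbours, so it gets a score of zero.
            scores[r][c] = sum(diffs) / len(diffs) if diffs else 0.0
    return scores


def scan_order(scores):
    """List cell positions from most to least salient."""
    cells = [(r, c) for r in range(len(scores)) for c in range(len(scores[0]))]
    return sorted(cells, key=lambda rc: scores[rc[0]][rc[1]], reverse=True)


# A dark 5x5 image with one bright cell in the middle.
image = [[20] * 5 for _ in range(5)]
image[2][2] = 220

scores = saliency_map(image)
order = scan_order(scores)
print(order[0])  # (2, 2): the bright cell is the most salient
```

The list returned by `scan_order` plays the role of the predicted eye path: the first entry is where the eyes should snap to, followed by the next most salient cell, and so on.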

Milan and Peter’s work is helping to change our understanding of how the brain finds things in an image – or at least, it’s putting a nail in the coffin of a once-favoured theory. Scientists used to think that there were two ways for the brain to notice things, and it used them at different times. When there was an obvious standout bit in a picture, the brain took in the whole image at the same time and spotted the salient bit immediately. When things weren’t so obvious, another approach took over in which the eye started in one corner of the picture and proceeded to check the whole thing in rows, as though it were ploughing a field.

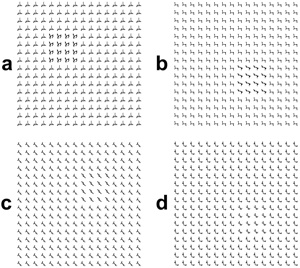

Can you spot the square inside the square? 'A' should be pretty easy but each one gets more difficult until 'D' is very tough.

There was a problem with that theory, though – it only covered the two extremes. What happened when something was in the middle, neither really obvious nor really hidden? Where did the brain switch between methods? Well, Milan and Peter’s program let them create images that were right in that troublesome middle ground. They found that there wasn’t a switchover point. The brain uses the same approach no matter how noticeable any part of the image is. It just takes a little longer to spot things if they’re not as obvious.

Armed with an understanding of how the brain notices things, you can put it to work elsewhere. One use could be making spot-the-difference pictures: you would know exactly which changes would be hard to find and which ones would be a piece of cake. On the more serious side, you could use it to teach a robot how to notice things. Humans know how to filter what they see into the most noticeable things, but how could a robot know what was important about its surroundings? Now we might be able to give it some clues.

There’s another application for Milan and Peter’s work, and it’s a little artistic. Remember how one of the things that makes something noticeable is how detailed it is? It turns out that painters know that by instinct. The bits of a painting that you’re meant to notice are also the ones that have the most detail in them. Your eye goes right to the most important part. Milan and Peter’s program has a ‘painter’s algorithm’ built in that can turn a photo into a painting, using broader brushstrokes in the parts that are less important and finer ones where your eye is meant to go.
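One way to picture the brushstroke idea is as a simple rule that turns saliency scores into brush widths. The sketch below is only a guess at the general shape of such a rule, not the algorithm in Milan and Peter's program: it assumes a grid of saliency scores is already available, and the function name `brush_sizes` and the brush widths of 1 to 9 are invented for the example.

```python
def brush_sizes(scores, min_brush=1, max_brush=9):
    """Pick a brush width for each cell: fine where salient, broad elsewhere.

    The linear mapping and the default widths are assumptions made for
    this illustration.
    """
    top = max(max(row) for row in scores)
    sizes = []
    for row in scores:
        size_row = []
        for score in row:
            # Share of the highest score, from 0.0 (dull) to 1.0 (most salient).
            share = score / top if top > 0 else 0.0
            size_row.append(round(max_brush - (max_brush - min_brush) * share))
        sizes.append(size_row)
    return sizes


example_scores = [[0.0, 50.0], [100.0, 200.0]]
print(brush_sizes(example_scores))  # [[9, 7], [5, 1]]
```

The least noticeable cell gets the broadest brush (9) and the most noticeable gets the finest (1), so the detail ends up where the eye is meant to go.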

Now that we know more about what makes things stand out, maybe we can assume that if you are going to hang out sipping milkshakes and hoping to become a film star, it would help to be wearing a high-contrast, colourful dress with lots of detail. Looking like Lana Turner probably couldn’t hurt either.